Open letter to Waterfront Toronto, City of Toronto Council, Mayor John Tory, Minister of Education Stephen Lecce, and the Premier of Ontario, Doug Ford, on the implications of “Data Creep in Schools and Daycares in Waterfront Toronto’s Quayside.”

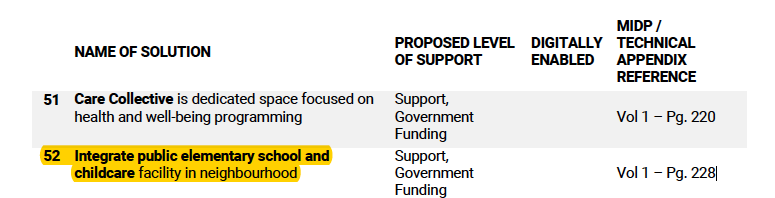

The just-released Quayside Discussion Guide, produced for Waterfront Toronto’s MIDP Evaluation Consultation, February 2020, Round 2, has one very troubling “solution” listed in the Complete Communities and Inclusivity section:

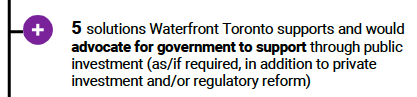

Waterfront Toronto categorizes the integration of a “public elementary school and childcare facility” in Quayside as a solution it supports if there is government support.

Waterfront Toronto’s failure to recognize the potential for the violation of children’s data privacy in these two domains, digital AND physical, is alarming.

First, under the Ontario Education Act, publicly funded schools are not currently considered spaces “that are open to the public,” i.e., public spaces. The question of whether schools are public places was raised before the Human Rights Commission in Fall 2017 with regard to Kenner Fee, an autistic boy who hoped to have his service dog in the classroom. The Waterloo Board’s lawyer, Nadya Tymochenko, stated, “The school is not a public space,” and “The classrooms in a school are not publicly accessible.”

“Our legislation recognizes the need to secure the physical safety of our children and restrict public access as to anyone entering a school. Period. Why data collection broadly framed here would be permissible, is a mystery. If data is strictly to do with utilities and infrastructure, water, electricity, temperature, that seems feasible and valuable. Any data collection beyond that opens up the potential for surveillance creep for our most vulnerable residents. That data here is undefined is not acceptable.” (Tymochenko.)

As to the casual inclusion of child care facilities, more alarms sound. If childcare facilities are privately funded, will this be an opt-in option for private businesses that serve children? That’s leaving aside data privacy precarity again, given Google’s history of collecting children’s personal information.

As I have noted elsewhere, there is no logical basis to trust that Sidewalk Labs will consistently adhere to whatever regulations are in effect. The lack of recognition in the Waterfront Toronto Quayside Discussion Guide as to the vulnerability of minors leaves open the potential for what Rob Kitchin has termed the phenomenon of “control creep.”

Kitchin’s work has documented how Smart City infrastructures “are promoted as providing enhanced and more efficient and effective city services, ensuring safety and security, and providing resilience to economic and environmental shocks, but they also seriously infringe upon citizen’s privacy and are being used to profile and socially sort people, enact forms of anticipatory governance, and enable control creep, that is re-appropriation for uses beyond their initial design” (2015, italics mine).

These concerns as to whether Alphabet subsidiary companies will rigorously respect data privacy and forgo data tracking continue to be significant given the new Feb. 20, 2020 charges brought against Google by the Attorney General of New Mexico, Hector Balderas, that Google is collecting the data of minors via its suite of ed-tech apps and services, including Chromebooks, G Suite, Gmail, and Google Docs. If proven, this will be the second time Google has knowingly collected children’s data via its ed-tech, in violation of COPPA, the Children’s Online Privacy Protection Act. (See other violations as to collecting children’s data). Although Google has now committed to phasing out, by 2022, the third-party cookies that enable data tracking, Google’s “Privacy Sandbox” regulations will not stop its own data collection.

We should be very concerned as to the scope and scale to which Google has already colonized our children’s futures, via its dominance in the ed-tech space, the entertainment space (YouTube Kids), and the really unfathomable extent of its dynamic, persistent, digital profiling of users’ organic online behaviour.

What possible options do we have to counter “data creep”?

First, remove this “solution” from the existing agreement until Canada’s protections for minors, which are currently inadequate, have been strengthened.

Second, look to the two significant regulations now impacting Google, YouTube Kids, and tech platforms that serve child-directed content.

The first is a Nov. 22, 2019 FTC requirement directed to YouTube and YouTube Kids that all content “directed to children” be tagged as such, that viewers of that content cannot be tracked with persistent identifiers, and that all other COPPA regulations must be met. This effectively requires YouTube Kids to self-regulate the compliance of the users of its platforms, and content creators globally are “scrambling” to work out how to avoid possible violations and financial penalties.

The second is the new UK “Age Appropriate Design Code” brought forward by the Information Commissioner’s Office that applies to all digital media companies and platforms and requires that harmful content be blocked from minors. Let me quote in full:

“‘There are laws to protect children in the real world. We need our laws to protect children in the digital world too.’ – UK Information Commissioner

Today the Information Commissioner’s Office has published its final Age Appropriate Design Code – a set of 15 standards that online services should meet to protect children’s privacy.

The code sets out the standards expected of those responsible for designing, developing or providing online services like apps, connected toys, social media platforms, online games, educational websites and streaming services. It covers services likely to be accessed by children and which process their data.

The code will require digital services to automatically provide children with a built-in baseline of data protection whenever they download a new app, game or visit a website.

That means privacy settings should be set to high by default and nudge techniques should not be used to encourage children to weaken their settings. Location settings that allow the world to see where a child is, should also be switched off by default. Data collection and sharing should be minimized and profiling that can allow children to be served up targeted content should be switched off by default too.” (Jan. 22, 2020.)

We do not have this degree of data protection for minors in Canada, let alone adults. We should be vigilant about not simply granting access to children’s data as a bullet point “solution” without any regard or attention to what that could mean in the future. We should be demanding regulation at the federal level that can impose significant and meaningful financial penalties and operational restrictions for all violations of children’s data privacy.

As I have said before, if we can’t effectively protect children’s data privacy, we should assume that data privacy for those aged 13 and over is functionally non-existent. Every adult living today who has spent time online has a dynamic, persistent, constantly updating targetable profile. Do we want this for our children? As adults and parents, we need to demand much more rigorous and punitive regulations, because if we don’t, it won’t happen and there will be no limits to “data creep.” In the US and the UK, outcry and pressure from parents, the media, and children’s privacy advocates, such as The Campaign for a Commercial-Free Childhood, are producing results. We need similar activism in Canada.

See my earlier post, “We Street Proof Our Kids. Why Aren’t We Data-Proofing Them?”, originally published on The Conversation.

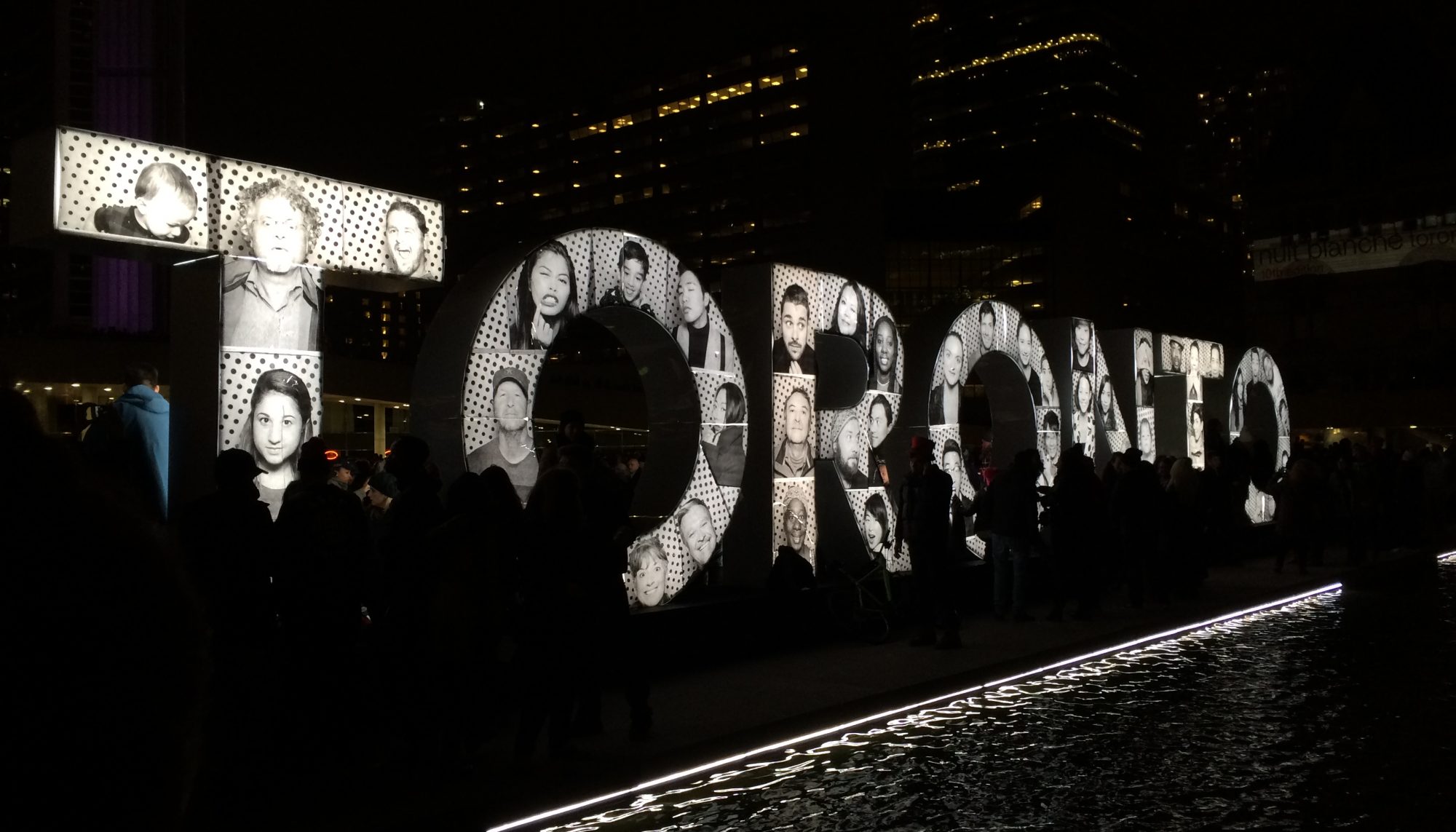

Top Photo Credit: Neonbrand on Unsplash