Big Tech Accountability and What We Can Do About It

I was invited to give a talk, “Children Not Profits,” to parents, students, and educators at the Dr. Denison High School in Newmarket in April 2024 on the dangers of our current online environment for minors and what parents and caregivers can do to demand effective regulation from our governments. I’m sharing this at the start of the school year while many parents and caregivers will be wrestling with the new cellphone bans. It may be helpful to talk with teens about the power asymmetry that is integral to the design of social media, apps, and “very large online platforms” (the term now used in the EU).

In brief, we interact unknowingly with interfaces and user experiences that are addictive by design and which leverage highly sophisticated manipulation techniques that trick you into the actions the designer intends, the latter now studied as dark patterns or dark deceptions. In addition, if you see sponsored, recommended, or “push” content in your apps and on webpages, you’re seeing the end product determined by your dynamic digital profile as targeted by thousands of data brokers selling your personal data to third parties. This 2018 keynote by Dr. Johnny Ryan, speaking to European broadcasters at EGTA CEO’s Summit in Madrid, is an excellent primer on ad targeting; note that it is now six years old.

In the discussions I’ve had with teens and parents, no one is aware of the degree of this power imbalance, and why should we be? Very large online platforms are amongst the wealthiest companies globally, and the money available for ongoing research, testing, and refinement of these techniques means that we are unprotected, constantly monitored, and unable to detect or respond to the constant updating of these systems’ features. How can any parent or teen stay abreast of what operates as design features “to enhance your experience”?

The talk I gave in the spring detailed the current state (spring 2024) of the online world as to data tracking, digital profiling, and content targeting, including the monitoring of searches, keystrokes, click-throughs, time spent, and more. We don’t think about the scope or scale of this data-sharing infrastructure when we see ads pop up on a web page. And in years of asking students and audiences who reads the Terms of Service, Terms of Use, or Privacy Policies, only a very small number have said yes. I’ve also asked students in my Data Privacy in Canada course how many used fake birthdays to create social media accounts before they turned 13. The answer is almost always unanimous, with many sharing that they did so as early as age 10 or 11.

Until recently, the minimum age of 13 for social media platforms was unquestioned as a de facto age-gate across online platforms. This began to change with whistleblower Frances Haugen’s disclosure of thousands of internal Facebook documents to the Wall Street Journal, detailing the tech company’s prioritizing of profit before public good and the company’s awareness of known harms to tween and teen girls. The WSJ published the details in September 2021 in its series, the Facebook Files, and Haugen then testified before the Senate Commerce, Science and Transportation Subcommittee on Consumer Protection, Product Safety and Data Security on Capitol Hill in October 2021.

Haugen’s revelations were arguably the catalyst for bipartisan concern as to the harms minors were experiencing online, in what in 2021 was a largely unregulated for-profit environment, with the exception of California’s more stringent laws introduced in 2020 with the California Consumer Privacy Act (CCPA). Many states have since passed or proposed more restrictive laws protecting minors.

Consider that the age-gate of 13 has meant that for more than a decade minors have had their data profiled and processed exactly as if theirs were adult accounts. In 2024, the degree of harm is now well documented. In April 2022, the nonprofit organization FairplayForKids.org published Designing for Disorder: Instagram’s Pro-Eating Disorder Bubble.

In December 2022, The Center for Countering Digital Hate published Deadly by Design: TikTok pushes harmful content promoting eating disorders and self-harm into young users’ feeds. Many more reports can be found online and some are referenced in my presentation.

More recently, alarms are being raised because of the global explosion in the sextortion of boys, from minors to young adults. On February 6, 2024, Canada’s RCMP released a statement, “Sextortion — a public safety crisis affecting our youth,” reporting that “According to Cybertip.ca, Canada’s tip line, 91% of sextortion incidents affected boys.” On April 29, 2024, just after I gave this talk, the UK’s National Crime Agency issued an unprecedented warning on the rise in sextortion of boys:

“All age groups and genders are being targeted, but a large proportion of cases have involved male victims aged between 14-18. Ninety one per cent of victims in UK sextortion cases dealt with by the Internet Watch Foundation in 2023 were male.”

The good news is that for the first time since I started researching and documenting online harms to minors in 2017, there is now a much wider understanding of the degree and nature of harms, and the mechanisms that contribute to those harms.

We had a fantastic Q & A with questions from students, the first being: “Do you think social media has decreased attention spans?”

I flipped the question and asked the student, “Do you think social media has decreased your attention span?” and they answered emphatically, “YES” and we all laughed.

The second question from a student was, “What do you think of the TikTok ban?”

My response was that I was less concerned with TikTok, in particular, in the context of the standard for-profit drivers of tech companies. I did point to the differences between TikTok and Douyin, its “sister company” in China, as the latter has significantly stricter content restrictions in “teenager mode.”

In 2018, “Douyin introduced in-app parental controls, banned underage users from appearing in livestreams, and released a “teenager mode” that only shows whitelisted content, much like YouTube Kids. In 2019, Douyin limited users in teenager mode to 40 minutes per day, accessible only between the hours of 6 a.m. and 10 p.m. Then, in 2021, it made the use of teenager mode mandatory for users under 14” (Source: MIT Technology Review 2023).

Rather than focusing on the security risks, I suggested they think about these two very different use models and ask why the Chinese parent company, ByteDance, maintains much more restrictive limits on content for Chinese youth.

We also talked about Jonathan Haidt’s four tips for parents in The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness:

- No smartphones before high school.

- No social media before 16.

- Phone-free schools through collective action.

- More independence and agency in the “real” world.

Haidt’s tips are much debated, and how they might be enacted will depend on parents, communities, and schools. Without question, as one counterexample, there are kids who might depend on smartphones for specialized learning.

Jonathan Haidt on Smartphones vs. Smart Kids

What I would add, though, is the need for an intervention in the system of “surveillance capitalism” (Shoshana Zuboff, 2019) and a push for an absolute restriction against the digital profiling or ad-targeting of minors, with anyone under 16 being fully removed from the systems of data capture. Teens, parents, caregivers, and educators will always be playing catch-up to constant updates, changing terms, and new features rolled out by tech companies. As such, again, the burden of responsibility can’t be on the users of these technologies, as the asymmetry of power and resources is too great.

One model Canadians could look to is the EU’s Digital Services Act (DSA), which mandates “Zero tolerance on targeting ads to children and teens and on targeting ads based on sensitive data” (from the European Commission website):

“The DSA bans targeted advertisement to minors on online platforms. [Very Large Online Platforms] VLOPs have taken steps to comply with these prohibitions. For example, Snapchat, Alphabet’s Google and YouTube, and Meta’s Instagram and Facebook no longer allow advertisers to show targeted ads to underage users. TikTok and YouTube now also set the accounts of users under 16 years old to private by default.”

As Canadian laws are substantially behind the EU and the US (proposed and passed), we should push for a rights-based approach that puts children before profits. Given that “Very Large Online Platforms” or VLOPs now have to align with the EU’s zero tolerance model to safeguard minors, there is no systems barrier to that being implemented here in Canada.

This shift will take collective, community action to pressure our governments and legislators for similar safeguards. Right now, we in Canada have a substantially better shot at achieving this goal as American legislators are also pushing for greater transparency, rights, and regulations from Big Tech.

“Children not profits.” It should be that simple.

UNICEF Policy guidance on AI for children 2021

See also my earlier posts, “We street-proof our kids. Why aren’t we data proofing them?” (October 2, 2019).

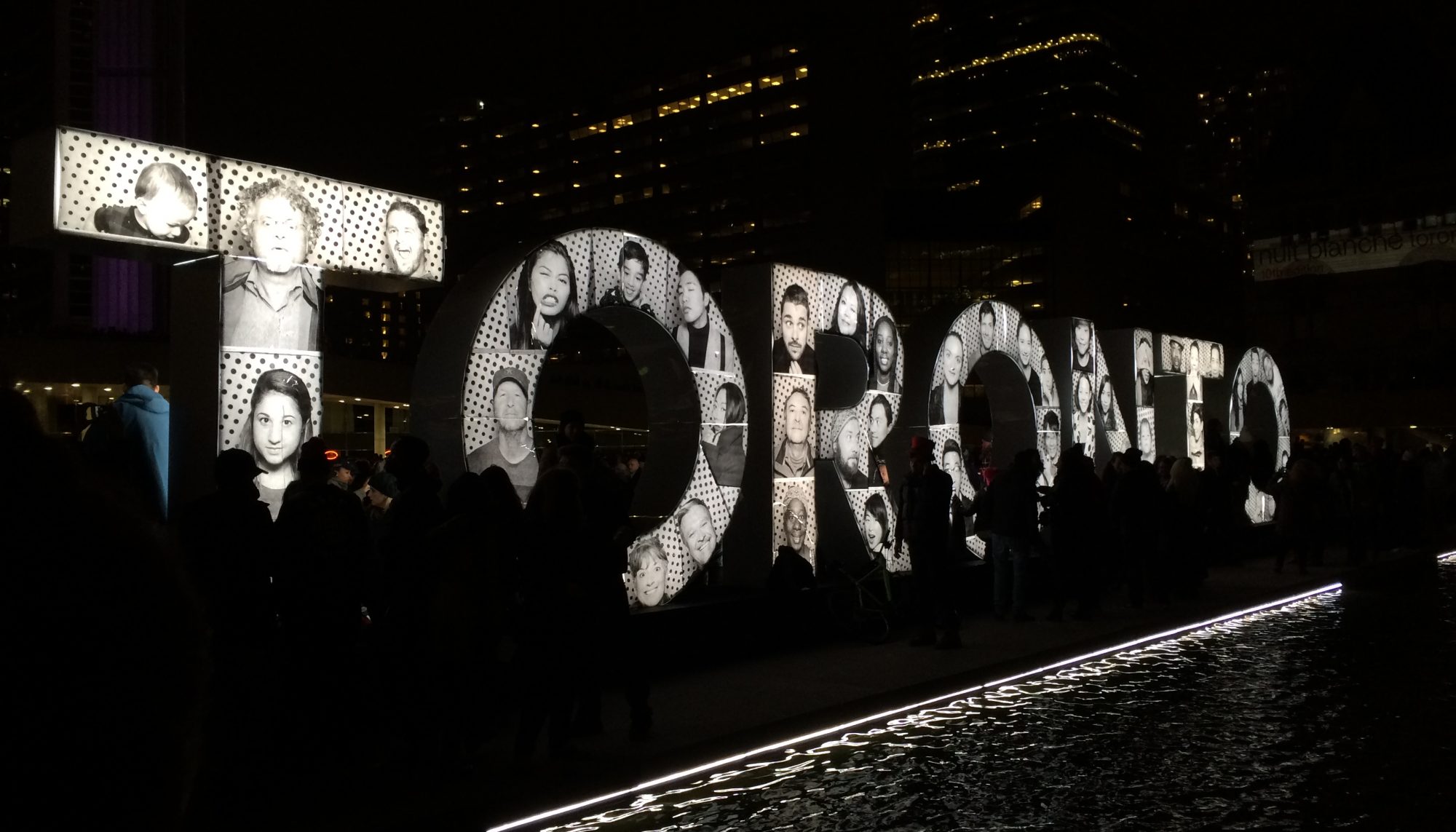

And, “Can We Trust Alphabet & Sidewalk Toronto with Children’s Data? Past Violations Say No.” (June 6, 2019).